rich guy with friends

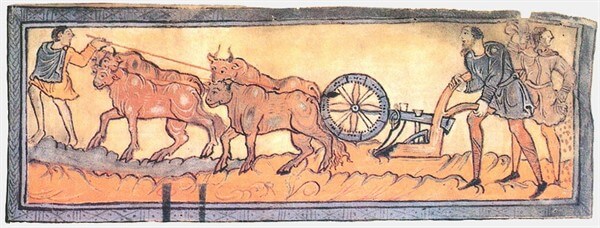

Imagine you are a rich farmer in the Middle Ages. Life is good, and even though you have the most land to plough, you’re always the first to plant the seeds, because your loyal oxen do the hard work for you.

Now, not everybody is that lucky. Your neighbors are still using their bare hands and feet, and working all day in the heat is wearing them down.

Being such a good neighbor, you decide to help them by offering your oxen as a service. Now everyone is winning: they get more land prepared for crops and you collect a small fee for lending them the oxen.

Scaling

The business is going well and now the villagers are queueing at your door for a time slice with your beasts.

Would you replace your ox with a bigger one to get more work done? Sure, it will work for a while, but oxen can only get so big, right?

In application architecture, this approach is called scaling up. As processing demand increases, you add more resources to your server or even replace it with a better one.

Scaling out is another approach to application scaling. More servers are added to meet an increase in processing demand and work is split among them.

Not all applications can scale both ways. Scaling out works for applications that can split work into independent subtasks, while scaling up is the option left for applications whose tasks depend on one another and cannot be split.

Adding more oxen allows you to plough more fields at the same time, while having the fastest ox allows you to finish a field faster. Ideally, you’d want to have more fast oxen.
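
To put rough numbers on the analogy, here is a toy model in Python. It assumes each ox ploughs one field at a time and that fields are independent of each other; the function name `ploughing_days` and all figures are made up for illustration.

```python
import math


def ploughing_days(fields: int, oxen: int, days_per_field: float) -> float:
    """Days needed to plough all fields, with each ox working one field at a time."""
    # Fields are independent, so the oxen work in parallel. The busiest ox
    # ploughs ceil(fields / oxen) fields back to back.
    rounds = math.ceil(fields / oxen)
    return rounds * days_per_field


# Eight fields, one ordinary ox that needs two days per field.
baseline = ploughing_days(fields=8, oxen=1, days_per_field=2.0)

# Scaling up: one ox that is twice as fast.
scaled_up = ploughing_days(fields=8, oxen=1, days_per_field=1.0)

# Scaling out: four ordinary oxen.
scaled_out = ploughing_days(fields=8, oxen=4, days_per_field=2.0)

# A single field cannot be shared, so extra oxen do not help here,
# while a faster ox still does.
one_field_out = ploughing_days(fields=1, oxen=4, days_per_field=2.0)
one_field_up = ploughing_days(fields=1, oxen=1, days_per_field=1.0)

if __name__ == "__main__":
    print(baseline, scaled_up, scaled_out)   # 16.0 8.0 4.0
    print(one_field_out, one_field_up)       # 2.0 1.0
```

More oxen win when there are many fields; the faster ox wins when there is only one.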

Costs

Scaling up has the benefit of low maintenance costs because the application itself never has to change.

Administrative tasks are simple: any upgrades or patches for your application or the infrastructure (OS, servers) get deployed to a single instance and errors are investigated in a single place. You really love your admins, right?

Hardware costs may, however, become prohibitive. It’s easy to add more memory or CPUs when slots are available, but after a while you’ll have to start replacing parts and throwing away the old ones. Some components don’t support mix and match, so you have to replace them all at once.

Then there’s the snowball effect: more CPU power draws more electricity, which calls for better cooling, and the operational costs only go up.

Scaling out allows you to keep using old components. New servers are added on demand and spun down again once the peak in demand has passed, eliminating wasted energy.
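
As a back-of-the-envelope sketch of adding servers on demand, the snippet below works out how many servers each period of the day would need. The function `servers_needed`, the per-server capacity and the demand figures are all hypothetical, and the model ignores startup time and safety margins.

```python
import math


def servers_needed(requests_per_second: float, capacity_per_server: float,
                   minimum: int = 1) -> int:
    """Smallest number of servers that covers the load, never below `minimum`."""
    return max(minimum, math.ceil(requests_per_second / capacity_per_server))


# Hypothetical load over four periods of a day, in requests per second.
demand = [120, 900, 2400, 600]
capacity = 500  # assumed requests per second one server can handle

on_demand = [servers_needed(load, capacity) for load in demand]

# Sizing for the peak all day long keeps the largest fleet running every period.
fixed_for_peak = max(on_demand) * len(demand)

if __name__ == "__main__":
    print(on_demand)                        # [1, 2, 5, 2]
    print(sum(on_demand), fixed_for_peak)   # 10 20
```

In this made-up day, scaling out on demand uses half the server-periods that a fleet sized for the peak would.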

Efficiency

Let’s consider just three resources that can be improved: CPU, RAM and HDD. Any increase in the three resources translates to some benefit for your application, but the challenge is to make your application scale effectively.

In order to take advantage of additional CPUs or cores, your application needs to be able to split the tasks it performs into similar subtasks that can be performed in parallel. A higher CPU frequency only benefits applications that are CPU bound (compute intensive), such as signal processing, movie compression or chess AI.
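
Here is a minimal sketch of what splitting a task into similar, independent subtasks can look like, using Python's standard `concurrent.futures` module. Counting primes is just a stand-in for any CPU-bound job; the helper names (`count_primes`, `split_range`, `count_primes_parallel`) are invented for this example.

```python
from concurrent.futures import ProcessPoolExecutor


def is_prime(n: int) -> bool:
    """Trial division; deliberately simple and CPU bound."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def count_primes(bounds: tuple[int, int]) -> int:
    """Count primes in the half-open range [start, stop)."""
    start, stop = bounds
    return sum(1 for n in range(start, stop) if is_prime(n))


def split_range(start: int, stop: int, parts: int) -> list[tuple[int, int]]:
    """Cut [start, stop) into at most `parts` contiguous, non-overlapping chunks."""
    if stop <= start:
        return []
    step = -(-(stop - start) // parts)  # ceiling division
    return [(lo, min(lo + step, stop)) for lo in range(start, stop, step)]


def count_primes_parallel(start: int, stop: int, workers: int) -> int:
    """Each chunk is an independent subtask, so one process per chunk can run it."""
    chunks = split_range(start, stop, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, chunks))


if __name__ == "__main__":
    print(count_primes_parallel(0, 50_000, workers=4))
```

The chunks share no state, which is what lets extra cores help. A task whose steps each depend on the previous result could not be cut up this way.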

An increase in RAM size benefits applications that handle large amounts of data at the same time, but has little impact when only small data is involved.

Hard drive speed and RAID configurations impact applications that make frequent data reads/writes to a persistent medium, such as database servers.

While considering scaling up, you should also look at the way your infrastructure scales up. Some operating systems or servers are limited to a maximum number of processors or amount of RAM and, after all, it’s not enough to add more memory if your application can’t access it.

Single point of failure

I’m coming back to the ploughing analogy with sad news: one of your oxen just died and the other one can’t handle the yoke alone. How do you make sure your oxen live forever?

This is probably the most important aspect of a critical enterprise application: stability. If your application has crashed, it matters little how fast the server is or how much RAM it has.

I can’t name off the top of my head an application that has never crashed. Whether it was the OS crashing and dragging the application down with it or a bug in the application itself, software uptime is never 100%, so strategies need to be in place to cope with downtime.

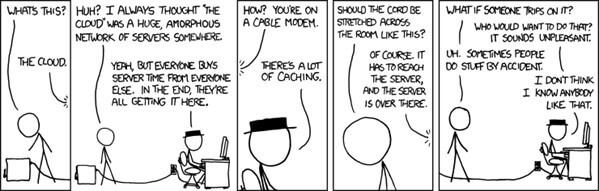

image courtesy of xkcd

The strategy I found to be the most effective is to embrace the crashes. Count on them to happen and make sure your application crashes gracefully. No work should be left in limbo, no other system functions should be disrupted and enough information to diagnose the crash should be persisted.
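
As a small illustration of these three rules at the level of a single job queue, consider the Python sketch below. It is not how any particular product does it; `process_jobs`, `fragile_handler` and the `crashes.jsonl` log file are hypothetical names, and a real system would also have to survive the whole process dying, not just an exception.

```python
import json
import tempfile
import traceback
from collections import deque
from pathlib import Path


def process_jobs(pending: deque, handler, crash_log: Path) -> list:
    """Run `handler` on every pending job; one failing job never stops the rest."""
    done, failed = [], []
    while pending:
        job = pending.popleft()
        try:
            done.append(handler(job))
        except Exception as exc:
            # No work left in limbo: remember the job so it can be retried.
            failed.append(job)
            # Persist enough information to diagnose the failure later.
            record = {
                "job": repr(job),
                "error": repr(exc),
                "traceback": traceback.format_exc(),
            }
            with crash_log.open("a", encoding="utf-8") as fh:
                fh.write(json.dumps(record) + "\n")
    # Failed jobs go back on the queue once the healthy ones are finished.
    pending.extend(failed)
    return done


def fragile_handler(job: int) -> int:
    """Hypothetical handler that always fails on job 2."""
    if job == 2:
        raise ValueError("cannot process job 2")
    return job * 10


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log = Path(tmp) / "crashes.jsonl"
        queue = deque([1, 2, 3])
        print(process_jobs(queue, fragile_handler, log))  # [10, 30]
        print(list(queue))                                # [2]
        print(log.read_text(encoding="utf-8"))
```

Jobs 1 and 3 complete even though job 2 fails, job 2 is still on the queue afterwards, and the log holds the traceback for whoever investigates.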

Downtime doesn’t have to be related to crashes; it can also be an effect of application maintenance. In a scale-out scenario, servers can be taken offline and serviced as required, without disrupting the whole application.

In the next posts I’ll look more into how we approached scaling up and out with FinTP and also crash recovery strategies.

by: Horia Beschea